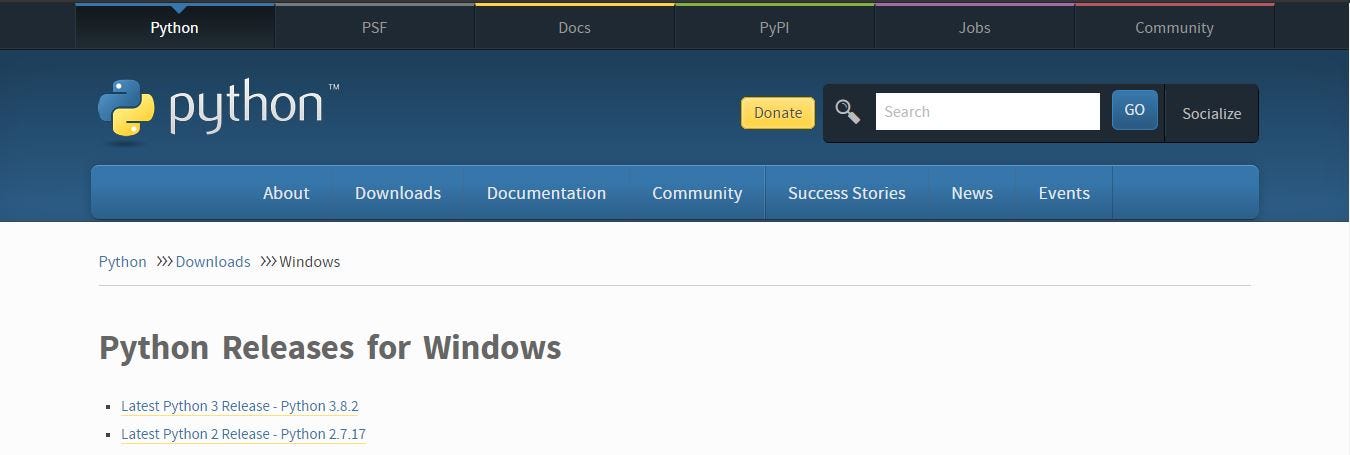

In this Python for Data Science tutorial, you will learn about web scraping and parsing in Python using Beautiful Soup (bs4) in Anaconda with Jupyter Notebook. You will also see how to use pandas to scrape data from the web: pandas, a well-known Python library for data analysis, also contains robust functionality for reading data from various external sources. Usually, when data scientists think of data scraping, the Beautiful Soup library is the one that comes to mind.

Description:

In this 2-day (6-hour) workshop, participants will be introduced to the Jupyter Notebook environment, an interface for using Python, and to basic web scraping techniques using the Python programming language. No previous experience with Python is required.

The goal is to equip participants with Python skills that will benefit their research. Sample data and worksheets will be provided. In the morning, we will focus on getting familiar with Jupyter notebook and Python as well as reading and importing data from various resources. In the afternoon, we will cover data management skills and web scraping. By the end of the workshop, participants should be able to run basic Python code and scrape a webpage using Python. If participants have specific questions about using Python for their own research projects following the workshop, they are encouraged to make appointments for individual consultations.

Instructor: Hsin Fei Tu

Hsin Fei Tu is a Methodology Consultant at ISSR and a PhD student in Sociology at UMass Amherst. She holds master's degrees in Sociology and National Development. She has taught a number of workshops on quantitative research methods, R, and social network analysis. Her research interests are inequality, research methodology, and social network analysis.

Questions? For more information about this or any of the ISSR Summer Methodology Workshops, please contact ISSR Director of Research Methods Programs, Jessica Pearlman (jpearlman@issr.umass.edu).

Please select the option for '6-Hour Workshop' when you register for this workshop.

Because we have calibrated workshop fees based on the number of hours, those wishing to take more than one workshop MUST REGISTER SEPARATELY FOR EACH ONE. DO NOT CHECK MULTIPLE WORKSHOPS when you register for this one.

Five College Students and Faculty

- Five College Undergraduate and Graduate Students: $75/person

- Five College Faculty: $125/person

Non-Five College Students and Faculty

- Non-Five College Undergraduate and Graduate Students: $150/person

- Non-Five College Faculty, Staff and Other Professionals: $200/person

Registration note: The Five Colleges include UMass Amherst, Amherst College, Hampshire College, Mount Holyoke College, and Smith College. Registration closes for each workshop 2 full business days prior to the start date. Please note: payment of registration does not guarantee a spot in these space-limited workshops. You will receive an email confirming your successful registration if accepted. If a workshop you have registered for is over-subscribed, you will be refunded and offered placement on a wait-list. If paying with departmental funds or personal checks, contact Karen Mason (mason@issr.umass.edu).

Cancellation note: In cases where enrollment is 5 or fewer, we reserve the right to cancel the workshop. In cases where the registrant cancels prior to the workshop, a full refund will be given with two weeks' notice, and a 50% refund will be given with one week's notice. We will not be able to refund in cases where the registrant does not notify us of cancellation at least one week prior to the beginning date of the workshop.

How can we scrape a single website? In this case, we don’t want to follow any links. I will describe following links in another blog post.

First of all, we will use Scrapy running in Jupyter Notebook. Unfortunately, there is a problem with running Scrapy multiple times in Jupyter. I have not found a solution yet, so let’s assume for now that we can run a CrawlerProcess only once.

In the first step, we need to define a Scrapy Spider. It consists of two essential parts: start URLs (which is a list of pages to scrape) and the selector (or selectors) to extract the interesting part of a page. In this example, we are going to extract Marilyn Manson’s quotes from Wikiquote.

Let’s look at the source code of the page. The content is inside a div with “mw-parser-output” class. Every quote is in a “li” element. We can extract them using a CSS selector.

What do we see in the log output? Things like this:

It is not a perfect output. I don’t want the source of the quote and HTML tags. Let’s do it in the most trivial way because it is not a blog post about extracting text from HTML. I am going to split the quote into lines, select the first one and remove HTML tags.

The proper way to process the extracted content in Scrapy is to use a processing pipeline. As the input of the processor, we get the item produced by the scraper and we must produce output in the same format (for example a dictionary).
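A minimal sketch of such a pipeline item, assuming each scraped item is a dictionary with a single `quote` key that holds raw HTML. The class name `ExtractFirstLinePipeline` is hypothetical, and the regular expression is a deliberately naive way to drop tags, in keeping with the "most trivial way" above.

```python
import re


class ExtractFirstLinePipeline:
    # Naive pattern that matches anything shaped like an HTML tag.
    _tag_pattern = re.compile(r"<[^>]+>")

    def process_item(self, item, spider):
        # Split the raw HTML into lines and keep only the first one,
        # which drops the nested list holding the source of the quote.
        lines = item["quote"].splitlines()
        first_line = lines[0] if lines else ""
        # Remove the tags and return an item in the same format.
        return {"quote": self._tag_pattern.sub("", first_line).strip()}
```

Scrapy calls `process_item` once for every item the spider yields, so the class needs no other methods for this simple case.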

It is easy to add a pipeline item. It is just a part of the custom_settings.

There is one strange part of the configuration. What is the integer value in the dictionary? What does it do? According to the documentation: “The integer values you assign to classes in this setting determine the order in which they run: items go through from lower valued to higher valued classes.”
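As a sketch, the relevant part of `custom_settings` could look like this. I assume the pipeline class is defined in the notebook itself, which is why its path starts with `__main__`. The class name is hypothetical and the value 300 is an arbitrary choice; Scrapy's documentation says it is customary to pick numbers in the 0-1000 range.

```python
# Set as a class attribute on the spider, e.g. "custom_settings = {...}".
custom_settings = {
    "ITEM_PIPELINES": {
        # "__main__" because the class lives in the notebook, not in a module.
        # With several pipelines, lower numbers run first.
        "__main__.ExtractFirstLinePipeline": 300,
    },
}
```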

What does the output look like after adding the processing pipeline item?

Much better, isn’t it?

I want to store the quotes in a CSV file. We can do it using a custom configuration. We need to define a feed format and the output file name.
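Which settings to use depends on the Scrapy version, so here is a sketch of both variants. The file name `quotes.csv` is my assumption. Older releases use the separate `FEED_FORMAT` and `FEED_URI` settings; since Scrapy 2.1 these are deprecated in favor of a single `FEEDS` dictionary.

```python
# Older Scrapy releases: feed format and output file as separate settings.
legacy_feed_settings = {
    "FEED_FORMAT": "csv",
    "FEED_URI": "quotes.csv",  # assumed output file name
}

# Scrapy 2.1 and later: one FEEDS dictionary keyed by output file.
feeds_settings = {
    "FEEDS": {
        "quotes.csv": {"format": "csv"},
    },
}
```

Either dictionary's entries can be merged into the spider's `custom_settings`.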

There is one annoying thing. Scrapy logs a vast amount of information.

Fortunately, it is possible to define the log level in the settings too. We must add this line to custom_settings:

Remember to import logging!
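A sketch of the log level setting, assuming we only want warnings and errors. `logging.WARNING` is my choice of level; any other level from the standard `logging` module works the same way.

```python
import logging

custom_settings = {
    # Hide Scrapy's DEBUG and INFO messages; keep warnings and errors.
    "LOG_LEVEL": logging.WARNING,
}
```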